Overview

A previous blog post dealt with setting up the Kuma service mesh to manage two web server machines running in AWS. This post adds the Kong API gateway to the mix, so the setup starts to resemble a modern distributed application.

Kong is an API gateway. It lets administrators secure and serve existing APIs from a single system, adds monitoring on top of them, and extends them with new functionality through plugins for the Kong platform.

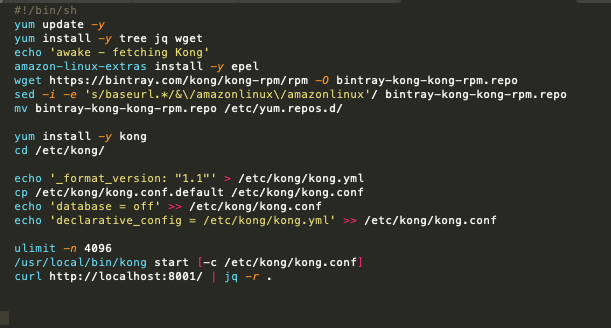

As in the Kuma post, I will take the installation commands from the Install Kong page at konghq.com and put them into an AWS EC2 launch template, so I can deploy quickly whenever I want to test new plugins or investigate Kong’s features.

Launch template and Userdata.sh

EC2 launch templates let us predefine machine specifications, subnets and tags, which we can then use to quickly launch one or more EC2 instances. A very handy feature of the launch template system is the ability to include a user data shell script. This script runs as root during the first boot and lets us update and configure things on the fly. My script does the following:

- The package manager runs update and also installs a few handy tools (jq, tree, etc.)

- The Kong repo file is downloaded, configured for Amazon Linux and made available to yum

- yum installs Kong

- The script configures Kong to run without a DB, reading its configuration from a YAML file instead

- ulimit is increased for this machine

- Finally, Kong is started and the script curls the Admin API on port 8001 so that the response shows up in the log output
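The steps above can be sketched as a script along the following lines. This is a minimal sketch rather than the exact script used here: `KONG_REPO_URL` is a hypothetical placeholder (the real repo file URL comes from the Install Kong page), the `/etc/kong/kong.conf` path and the `4096` file limit are assumptions, and the snippet simply writes the script to a local `userdata.sh` so it can be pasted into the launch template.

```shell
# Write the user data script to a local file for pasting into the launch template.
cat > userdata.sh <<'EOF'
#!/bin/bash
# Runs as root on first boot.

# Hypothetical placeholder: take the real repo file URL from the Install Kong page.
KONG_REPO_URL="https://example.invalid/kong.repo"

# Update packages and install a few handy tools.
yum update -y
yum install -y jq tree

# Make the Kong repository available to yum, then install Kong.
curl -fsSL "$KONG_REPO_URL" -o /etc/yum.repos.d/kong.repo
yum install -y kong

# Run Kong without a database (config path is an assumption).
cp /etc/kong/kong.conf.default /etc/kong/kong.conf
echo "database = off" >> /etc/kong/kong.conf

# Raise the open-file limit for this shell before starting Kong (assumed value).
ulimit -n 4096

# Start Kong and query the Admin API so the response lands in the boot log.
kong start -c /etc/kong/kong.conf
curl -i http://localhost:8001/
EOF
```

Running `bash -n userdata.sh` is a cheap way to catch syntax errors before the script goes into the template.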

Ready for Launch

Having set this up in the console, I now need to launch a Kong gateway and check that it is working. This launch was also a reminder to check whether the test NACL and SG setups in my VPC are still valid, and they are not! My IP address has changed since my last blog post, so I need to update the INBOUND and OUTBOUND sections of the NACL with my current IP address, and the INBOUND portion of the SG in the same way. Mutatis mutandis, I am now able to SSH into the Kong machine and verify that the setup has worked.

What is installed and running?

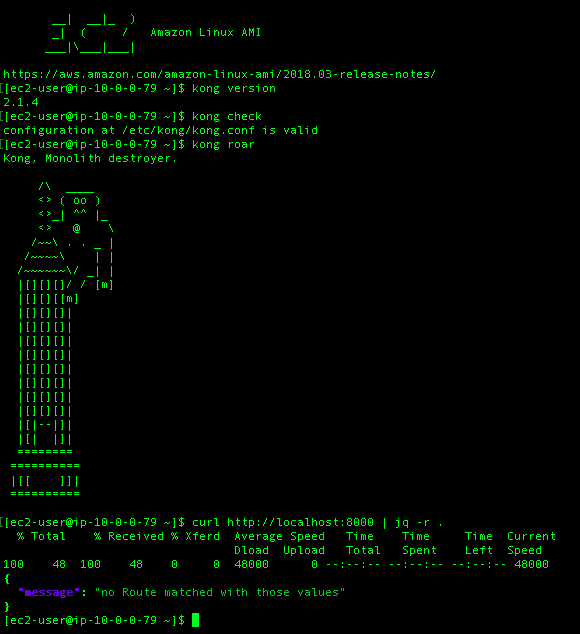

To see that the installation was successful I will ask Kong for the version, ask Kong to check its configuration file, then ask Kong to ‘roar’. The final check with curl shows me that Kong is indeed running and listening for connections on port 8000, which is the default proxy port for HTTP traffic. Kong is also helpfully telling us that we need to add some configuration to the gateway before it will route any requests for us.
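For readers who cannot make out the screenshot, the checks can be sketched as a small shell function. The config path `/etc/kong/kong.conf` and the function name `check_kong` are assumptions; the `kong` subcommands are the standard CLI ones. On a gateway with no routes yet, the final curl would be expected to return a 404 whose body says that no Route matched.

```shell
# Sanity checks for a fresh Kong install (config path is an assumption).
check_kong() {
  kong version || return 1                      # print the installed version
  kong check /etc/kong/kong.conf || return 1    # validate the configuration file
  kong roar                                     # print the Kong ASCII art
  curl -i http://localhost:8000/                # hit the proxy port
}
```

Call it with `check_kong` once you are logged in to the instance.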

In the interest of simplicity I will set up an S3 bucket to function as a web page and have Kong route requests to that public bucket.

S3 bucket as a webserver

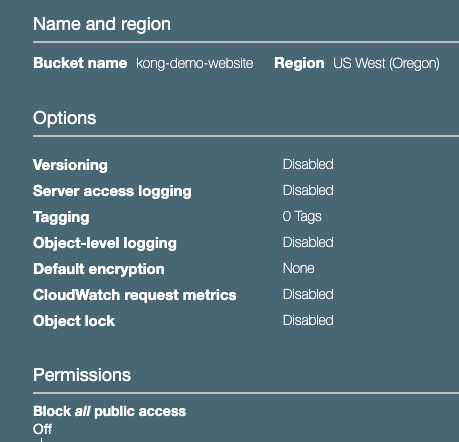

Navigate to the S3 console and create a new bucket for our site. Note that the bucket has “Block Public Access” set to off.

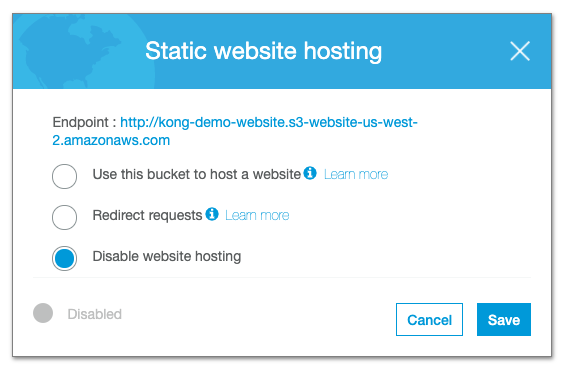

Once the bucket is created, I edit its properties, turn on “Static Website Hosting” and copy the website endpoint for use in our Kong configuration.

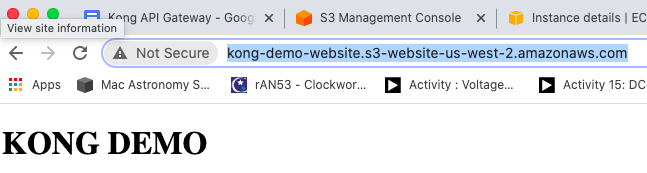

With this enabled, I now have to name the index and error documents for the web server and upload an HTML file for each. I have used a simple H1 heading in each, which lets me see which file is being served. In the upload settings I had to grant public read permission on both objects.
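I did all of this in the console, but the same steps could be scripted with the AWS CLI. The following is a sketch under assumptions: the function name `publish_site` and the bucket name you pass to it are hypothetical, and `--acl public-read` only works if the bucket still permits object ACLs.

```shell
# Create the two minimal pages, each with a single H1 heading.
printf '<h1>Index page</h1>\n' > index.html
printf '<h1>Error page</h1>\n' > error.html

# Enable static website hosting on a bucket and upload both pages as public objects.
# Usage: publish_site my-example-bucket   (bucket name is a hypothetical placeholder)
publish_site() {
  aws s3 website "s3://$1/" --index-document index.html --error-document error.html || return 1
  aws s3 cp index.html "s3://$1/index.html" --acl public-read || return 1
  aws s3 cp error.html "s3://$1/error.html" --acl public-read
}
```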

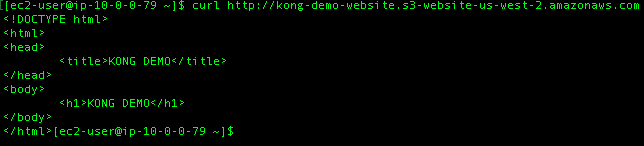

With that complete, I just need to configure Kong to route requests to that bucket, and make changes to the NACL and SG so that the Kong server can get out to the internet. In the previous blog post I set up my VPC with an internet gateway and a route table entry. I can use curl from the Kong server to verify that it can reach the bucket’s website endpoint.
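A check along these lines would do. The endpoint below is a hypothetical placeholder for the one copied from the bucket properties, and `bucket_status` is just an illustrative name.

```shell
# Hypothetical placeholder: use the website endpoint copied from the bucket properties.
S3_WEBSITE_HOST="${S3_WEBSITE_HOST:-example-bucket.s3-website-us-east-1.amazonaws.com}"

# Print only the HTTP status code returned by the bucket website endpoint.
bucket_status() {
  curl -s -o /dev/null -w '%{http_code}\n' --max-time 10 "http://${S3_WEBSITE_HOST}/"
}
```

Running `bucket_status` on the Kong machine should print a status code if outbound traffic is allowed; a timeout points back at the NACL or SG rules.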

All good.

Set up the route using Kong’s declarative configuration

When I set up this Kong machine I elected not to use a database. This means I cannot use the Admin API to add Services and Routes one at a time. Instead I have to initialise a YAML file to hold them and set the path to that file in the Kong config file.

$> kong config init

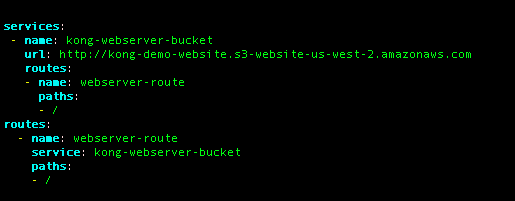

This command creates the kong.yml file, wherein I will add the declaration of the Service and the Route.
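As a rough sketch of what that declaration can look like, the snippet below writes a `kong.yml` with one Service pointing at the bucket and one Route matching `/`. The names `s3-site` and `s3-site-root` and the endpoint are hypothetical placeholders, and the `_format_version` value is an assumption; keep whatever value your generated file already contains.

```shell
# Hypothetical placeholder: use the website endpoint copied from the bucket properties.
S3_WEBSITE_HOST="${S3_WEBSITE_HOST:-example-bucket.s3-website-us-east-1.amazonaws.com}"

# Write a declarative config with one Service (the bucket) and one Route (the / path).
cat > kong.yml <<EOF
_format_version: "1.1"
services:
- name: s3-site
  url: http://${S3_WEBSITE_HOST}
  routes:
  - name: s3-site-root
    paths:
    - /
EOF
```

For Kong to pick the file up, the `declarative_config` setting in the Kong config file has to point at it. `kong config parse kong.yml` can be used to validate the file before reloading.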

Now I need to reload the configuration and test the route to the bucket.
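The exact command I ran is only visible in the screenshot, so the following is a sketch that assumes a full restart and a config file at `/etc/kong/kong.conf`; `reload_and_test` is an illustrative name.

```shell
# Path to the Kong config file (an assumption; adjust to your install).
KONG_CONF="${KONG_CONF:-/etc/kong/kong.conf}"

reload_and_test() {
  # Restart so Kong re-reads kong.conf and the declarative file it points to.
  kong restart -c "$KONG_CONF" || return 1
  # Request / through the proxy port; the bucket index page should come back.
  curl -i http://localhost:8000/
}
```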

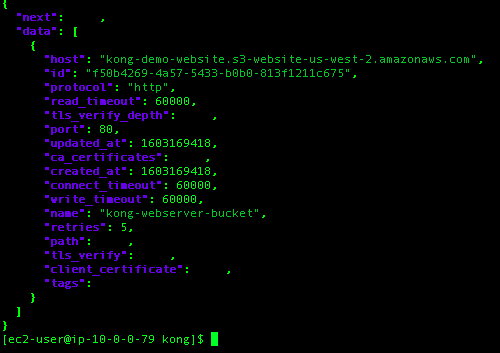

We can check the service is listed using the Admin API.

$> curl http://localhost:8001/services | jq -r .

We get a similar return when we GET the routes list.

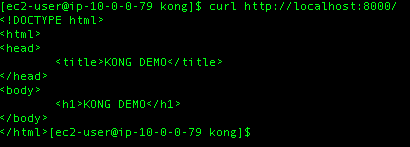

Now when I curl the / path on port 8000, Kong proxies that request to the S3 bucket and returns the result.

It works!

Summary

In ten or so minutes we have been able to create a templated Kong server development environment and Kongfigure® the gateway to serve our bucket web server on port 8000 of the local machine. This is a very simple example, but it is clear that we could configure any number of services and access those services by declaring Services and Routes in Kong. We can go further and configure the machine with a DNS name and build an application using Kong as the gateway.